BDD-style Acceptance Testing


This section is a guided tour through the concrete artifacts of the example repository at https://github.com/my-ellersdorfer-at/my-stuff. It is not a full code listing; instead, it explains why each artifact exists, what problem it solves, and how it supports fast, high-quality development.

The repository is intentionally structured like a real-world web product: backend, frontend, tests at multiple levels, and CI automation.

As the book emphasizes, the whole project is classic TDD territory:

- tests define behavior,
- production code follows,
- the design emerges through tests.

As a result, the system remains easy to change: the code becomes modular, loosely coupled, and cohesive, so changes can be made without getting entangled in peripheral complexity.

Repository Root — Orientation

At the root of the repository you will find:

- `domain/`
- `application/`
- `acceptance/`
- `ui-acceptance/`
- `ng-frontend/`
- CI configuration (`.github/workflows`)
- Infrastructure support (`docker-compose.yaml`, `certs/`, `keystore/`) for a local development setup
- Shared build configuration (`pom.xml`)

This separation is deliberate:

- business rules live in domain

- delivery mechanisms live in application

- behavior validation lives outside both

This prevents accidental complexity and keeps both feature development and refactoring safe.

Domain Module — Business Rules First

Purpose

The domain module contains:

- Entities (e.g., Asset)

- Interactors/use cases (e.g., AssetInteractor)

- Domain-level validation and invariants

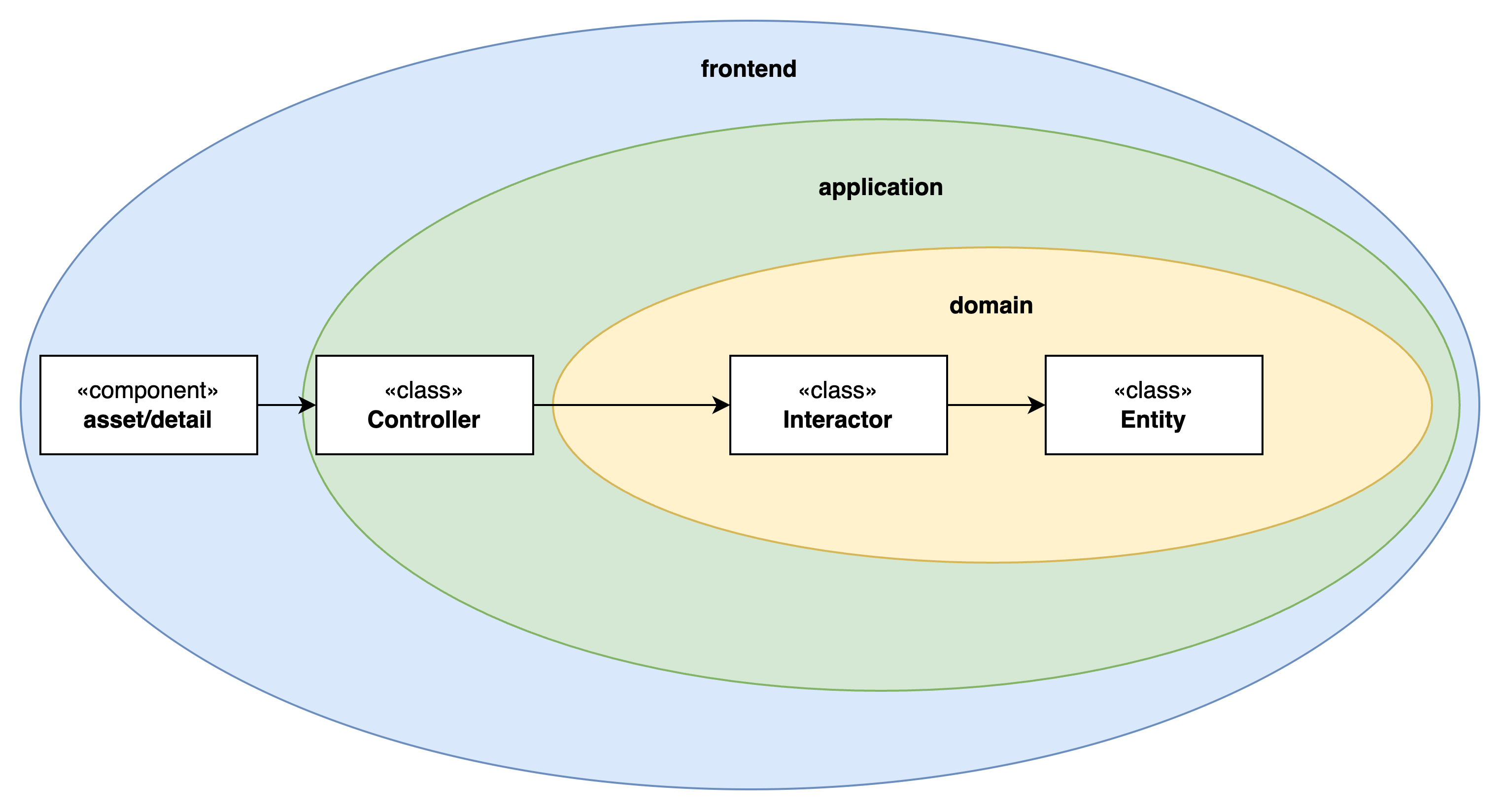

The domain module encapsulates all of the system's business logic, both application-independent and application-dependent. I deliberately chose to minimize the use of frameworks and libraries in the domain module: because the domain represents the business rules, the business should not depend on technicalities and frameworks.

- Entity: the application-independent business rules. Entities represent real-world business rules and objects that would exist even without software. Entities have no dependencies on external types and are the most technically stable types of the system. They are designed to change only deliberately.

- Interactor: the application-dependent business rules, which exist to automate interaction with the entities. Each public method can be understood as a use case of the system, exposed to its users. When the set of use cases grows over time, the interactor can easily be split into separate use case classes and would then act as a facade to the automation of the system. Like all other types inside the domain layer, the interactor must not depend on types and definitions from other layers of the system, apart from the language itself. This design reinforces the DIP (Dependency Inversion Principle).
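To make the Entity/Interactor split and the dependency inversion concrete, here is a minimal, self-contained sketch in plain Java. It is not the repository's code: the names `Asset`, `AssetInteractor`, `NoOwner`, `NotReadable`, and `InMemoryAssetStore` mirror those used by the acceptance drivers later in this section, but the `AssetStore` port, the plain constructor (instead of the repository's `AssetInteractorFactory`), and all method bodies are assumptions.

```java
import java.util.Collection;
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.UUID;

// Entity: application-independent business rule; no framework types involved.
record Asset(String id, String owner) { }

// Hypothetical counterpart of the domain's "not readable" exception.
class NotReadable extends RuntimeException { }

// Port owned by the domain; outer layers provide implementations (Dependency Inversion).
interface AssetStore {
    void save(Asset asset);

    Asset findById(String id);

    Collection<Asset> findByOwner(String owner);
}

// In-memory adapter, sufficient for fast, framework-free tests.
class InMemoryAssetStore implements AssetStore {
    private final Map<String, Asset> assets = new HashMap<>();

    @Override
    public void save(final Asset asset) {
        assets.put(asset.id(), asset);
    }

    @Override
    public Asset findById(final String id) {
        return assets.get(id);
    }

    @Override
    public Collection<Asset> findByOwner(final String owner) {
        return assets.values().stream()
                .filter(asset -> asset.owner().equals(owner))
                .toList();
    }
}

// Interactor: application-dependent rules; each public method is one use case.
class AssetInteractor {
    static class NoOwner extends RuntimeException { }

    private final AssetStore store;

    AssetInteractor(final AssetStore aStore) {
        store = Objects.requireNonNull(aStore);
    }

    String createAsset(final String owner) {
        if (owner == null) {
            throw new NoOwner();
        }
        Asset asset = new Asset(UUID.randomUUID().toString(), owner);
        store.save(asset);
        return asset.id();
    }

    Asset find(final String assetId, final String user) {
        Asset asset = store.findById(assetId);
        // Only the owner may read an asset; unknown ids are treated the same way.
        if (asset == null || !asset.owner().equals(user)) {
            throw new NotReadable();
        }
        return asset;
    }

    Collection<Asset> listAssets(final String user) {
        if (user == null) {
            throw new NoOwner();
        }
        return store.findByOwner(user);
    }
}
```

The interactor only knows the `AssetStore` interface it owns; a database-backed store living in an outer layer would implement that interface, so the source-code dependency points inwards, towards the business rules.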

Location

```
domain/
└── src
    ├── main/java/...
    └── test/java/...
```

Tests

```
domain/src/test/java/.../entity/AssetTest.java
domain/src/test/java/.../interactor/AssetInteractorTest.java
```

These tests:

- run fast,
- require no framework bootstrapping,
- define what must always be true.
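A framework-free test needs nothing but constructor calls and checks. The following sketch assumes, hypothetically, that `Asset` enforces a non-blank id and owner as its invariant; the repository's actual invariants and its JUnit-based `AssetTest` may differ, and `AssetInvariants` is an invented helper name.

```java
// Hypothetical entity whose invariants are enforced at construction time.
record Asset(String id, String owner) {
    Asset {
        if (id == null || id.isBlank()) {
            throw new IllegalArgumentException("asset id must not be blank");
        }
        if (owner == null || owner.isBlank()) {
            throw new IllegalArgumentException("asset owner must not be blank");
        }
    }
}

// Framework-free check: a plain constructor call, no container, no I/O.
final class AssetInvariants {
    private AssetInvariants() { }

    static boolean rejects(final String id, final String owner) {
        try {
            new Asset(id, owner);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }
}
```

In a JUnit test the same check would typically be written with `assertThrows(IllegalArgumentException.class, ...)`; either way it runs in milliseconds because nothing has to be started.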

Application Module — Delivery, Not Business Logic

Purpose

The application module wires:

- HTTP controllers,
- serialization,
- authentication/authorization,
- integration with the domain layer,

and provides the execution environment.

Location

```
application/
└── src
    ├── main/java/...
    │   └── web/AssetController.java
    └── test/java/...
        └── web/AssetControllerTest.java
```

{blurb, class: information}

The rule is simple: controllers translate requests into domain calls, nothing more.

Tests

Controller tests verify:

- request/response mapping,
- HTTP status codes,
- security constraints,
- error handling.

They do not re-test domain rules. That separation keeps tests focused and cheap.
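The "translate, nothing more" rule can be illustrated without Spring. In this hypothetical sketch, `AssetEndpoint`, `FindAsset`, and `Response` are invented names, and mapping a missing user to 401 and the domain's `NotReadable` to 403 is an assumption modelled on the status codes the controller driver in this section expects.

```java
// Hypothetical stand-in for the domain's "not readable" exception.
class NotReadable extends RuntimeException { }

// Minimal response value; a real controller would use the web framework's types.
record Response(int status, String body) { }

// The domain use case the controller delegates to (assumed shape).
@FunctionalInterface
interface FindAsset {
    String find(String assetId, String user);
}

// Translation only: read the request data, call the domain, map the outcome to HTTP.
class AssetEndpoint {
    private final FindAsset findAsset;

    AssetEndpoint(final FindAsset aFindAsset) {
        findAsset = aFindAsset;
    }

    Response get(final String assetId, final String authenticatedUser) {
        if (authenticatedUser == null) {
            return new Response(401, "");  // no identity: unauthorized
        }
        try {
            return new Response(200, findAsset.find(assetId, authenticatedUser));
        } catch (NotReadable e) {
            return new Response(403, "");  // identity known, access denied by the domain
        }
    }
}
```

The endpoint decides nothing about ownership; that rule stays in the domain behind `FindAsset`, which is why a controller test only has to check the mapping and not the business rule itself.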

{pagebreak}

Acceptance Module — Executable Specifications

System Architecture

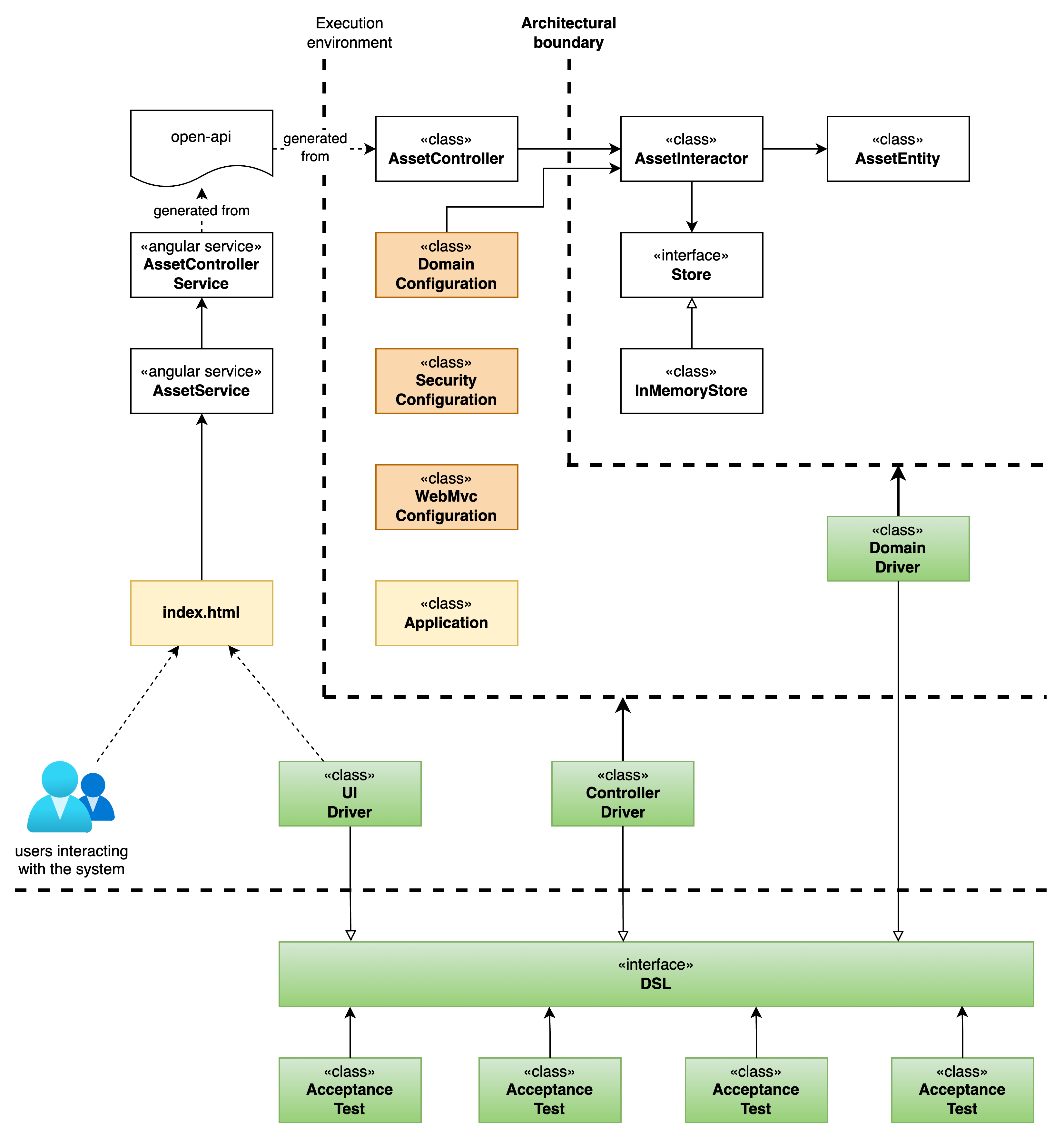

The system's architecture follows several guidelines that are widely known in the industry and are usually also part of broader approaches such as microservice or event-driven architectures. The building blocks of this project align well with these broader concepts; they keep behavior stable, make change easy, and keep technical choices such as relational databases or messaging systems optional. They are:

- bounded context
- architectural boundary
- plugin architecture

I consider these design decisions helpful, but they are not a prerequisite for BDD-style acceptance tests, which can always be added to a system. What is crucial is to understand how simple, and yet how powerful, Dave Farley's four-layer architecture for acceptance tests really is.

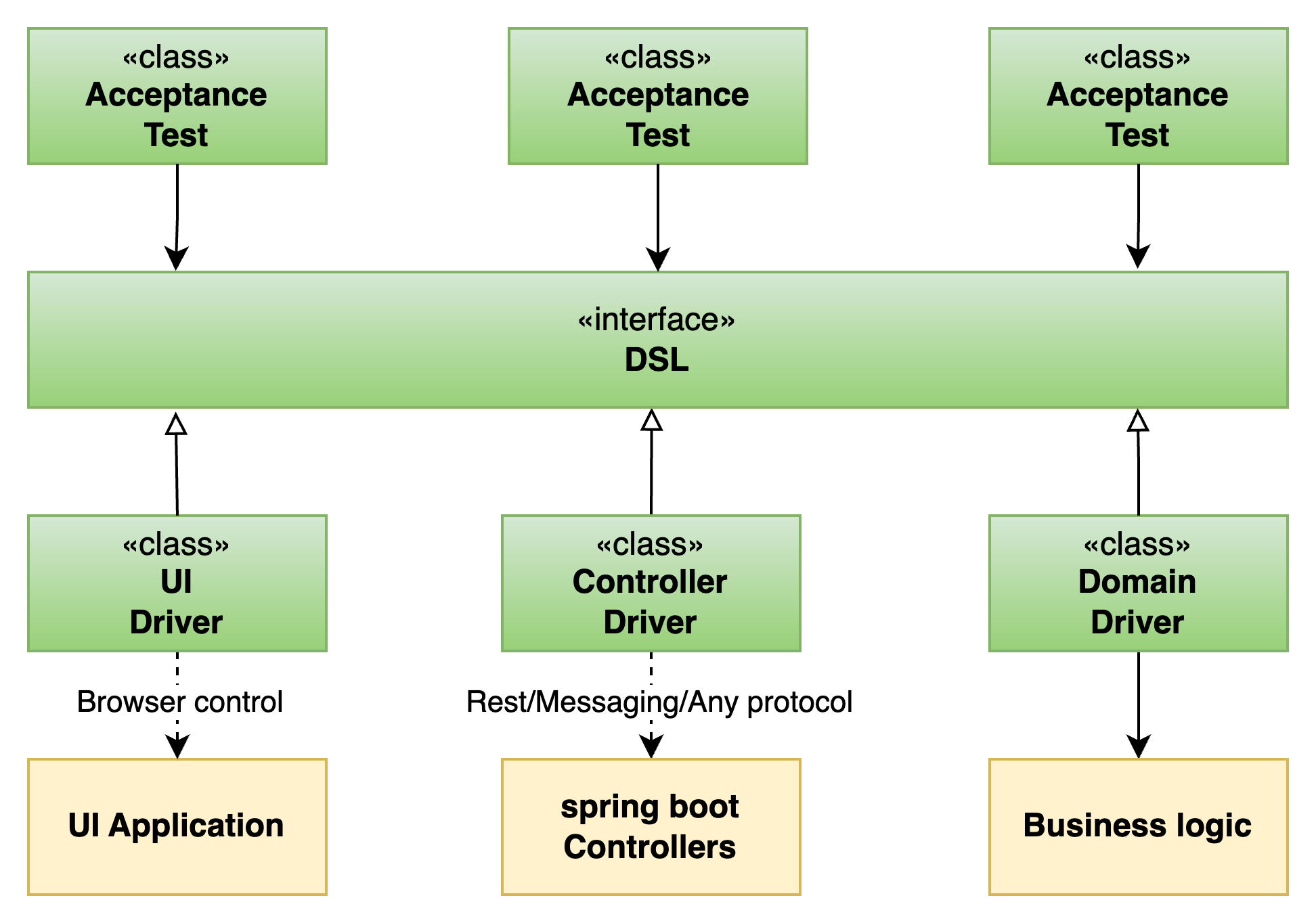

Layer 1 - The executable specification

The tests represent the executable specification of observable behavior, written in readable form using the building blocks defined in layer 2. They say nothing about how a problem is solved; they only describe what the problem is and whether the system under test solves it.

Layer 2 - DSL - domain specific language

The building blocks of the test architecture: actions and assertions, understandable by business people and engineers alike, that represent the behavior the system exposes to its users. If the system allows users to create an order or register a consultant, those are exactly the definitions the DSL consists of. No implementation details, just the smallest pieces of observable behavior that can be combined to describe a scenario.

Layer 3 - The protocol drivers

The translation layer: it translates DSL building blocks into the language and sequence of commands that the layer underneath, the system under test, understands. This is the only layer of the test architecture that is coupled to the implementation details of the system under test.

Layer 4 - The system under test

Within the scope of this project there is no running production system; nevertheless, three different kinds of deployables are targets of testing:

- Domain: the business rules.
- Controller: the backend application exposing REST controllers.
- UI: the user interface through which users access the system.
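The four layers fit into a few lines of code. The following self-contained sketch is hypothetical: `AssetSystem` stands in for a deployed system, `AssetDsl` for the DSL, `DirectCallDriver` for a protocol driver, and `OtherUserCannotReadSpec` for an executable specification. Only the driver knows how the system is called.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Layer 4 (stand-in): a tiny "system under test" with one business rule.
class AssetSystem {
    private final Map<String, String> ownerByAsset = new HashMap<>();

    String create(final String owner) {
        String id = UUID.randomUUID().toString();
        ownerByAsset.put(id, owner);
        return id;
    }

    boolean canRead(final String assetId, final String user) {
        return user != null && user.equals(ownerByAsset.get(assetId));
    }
}

// Layer 2: the DSL, describing observable behavior only.
interface AssetDsl {
    void authenticateAs(String user);

    String createAsset();

    boolean assetIsReadable(String assetId);
}

// Layer 3: a protocol driver translating DSL calls into calls the system understands.
class DirectCallDriver implements AssetDsl {
    private final AssetSystem system = new AssetSystem();
    private String currentUser;

    @Override
    public void authenticateAs(final String user) {
        currentUser = user;
    }

    @Override
    public String createAsset() {
        return system.create(currentUser);
    }

    @Override
    public boolean assetIsReadable(final String assetId) {
        return system.canRead(assetId, currentUser);
    }
}

// Layer 1: the executable specification, written only against the DSL.
final class OtherUserCannotReadSpec {
    private OtherUserCannotReadSpec() { }

    static boolean holdsFor(final AssetDsl dsl) {
        dsl.authenticateAs("User A");
        String assetId = dsl.createAsset();
        boolean ownerCanRead = dsl.assetIsReadable(assetId);
        dsl.authenticateAs("User B");
        return ownerCanRead && !dsl.assetIsReadable(assetId);
    }
}
```

Replacing `DirectCallDriver` with, say, a REST or browser driver would leave `OtherUserCannotReadSpec` untouched, which is exactly the property the repository's drivers exploit.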

Purpose

The acceptance module expresses system behavior as scenarios.

Acceptance tests answer:

- Can a user do X?
- What happens if a precondition is missing?
- What is observable at the system boundary?

Location

```
acceptance/
└── src/test/java/at/steell/mystuff/acceptance/
    ├── scenario/
    │   ├── AbstractAssetAcceptanceTest.java
    │   └── AssetControllerAcceptanceTest.java
    ├── driver/
    ├── dsl/
    └── oauth/
```

AbstractAssetAcceptanceTest

This class is the behavioral contract for asset-related functionality.

It defines scenarios such as:

- unauthenticated users cannot create assets,
- authenticated users can create assets,
- listing assets reflects system state.

The abstraction is intentional:

- inheritors may vary infrastructure or execution context,
- behavior remains identical.

This is BDD-style thinking:

- shared intent,
- multiple realizations.

```java
package at.steell.mystuff.acceptance.scenario;

import at.steell.mystuff.acceptance.driver.MyStuffAcceptanceDriver;
import at.steell.mystuff.acceptance.dsl.MyStuffAcceptanceDsl.AssetOptions;
import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.assertDoesNotThrow;

public abstract class AbstractAssetAcceptanceTest {

    private final MyStuffAcceptanceDriver driver;

    public AbstractAssetAcceptanceTest(final MyStuffAcceptanceDriver aDriver) {
        driver = aDriver;
    }

    protected MyStuffAcceptanceDriver getDriver() {
        return driver;
    }

    @Test
    void createAssetWithoutAuthentication() {
        assertDoesNotThrow(() -> driver.createAsset(new AssetOptions(null)));
    }

    @Test
    void authenticateUser() {
        driver.authenticateAsUser(driver.userA());
        driver.assertThatUserIsAuthenticated(driver.userA());
    }

    private String createAssetWithUser(final String userName) {
        driver.authenticateAsUser(userName);
        return driver.createAsset(new AssetOptions(userName));
    }

    @Test
    void createAsset() {
        driver.assertThatAssetExists(createAssetWithUser(driver.userA()));
    }

    @Test
    void unauthenticatedCannotRead() {
        String assetId = createAssetWithUser(driver.userA());
        driver.assertThatAssetExists(assetId);
        driver.unauthenticateUser();
        driver.assertAssetNotReadable(assetId);
    }

    @Test
    void otherCannotRead() {
        String assetId = createAssetWithUser(driver.userA());
        driver.assertThatAssetExists(assetId);
        driver.authenticateAsUser(driver.userB());
        driver.assertAssetNotReadable(assetId);
    }

    @Test
    void listNoAssetsWithoutAuthentication() {
        driver.authenticateAsUser(driver.userA());
        driver.createAsset(new AssetOptions(driver.userA()));
        driver.unauthenticateUser();
        assertDoesNotThrow(driver::noUserAssetsWithoutAuthentication);
    }

    @Test
    void listAssetsDifferentAuthentication_emptyResults() {
        driver.authenticateAsUser(driver.userA());
        driver.createAsset(new AssetOptions(driver.userA()));
        driver.unauthenticateUser();
        driver.authenticateAsUser(driver.userB());
        driver.listUserAssets(driver.userB());
        driver.assertListOfAssetsIsEmpty();
    }

    @Test
    void listAssets_containsCreated() {
        driver.authenticateAsUser(driver.userA());
        String assetId = driver.createAsset(new AssetOptions(driver.userA()));
        driver.listUserAssets(driver.userA());
        driver.assertListOfAssetsContains(assetId);
    }
}
```

Scenarios do not know how the system is called, only what happens. This is hugely valuable for the sustainability of the system: as long as the expected behavior has not changed, the test is right. Whatever the outcome of an execution, the specification itself remains correct.

In our example, as long as a user should be able to create an asset, the corresponding test will always be correct. If an execution fails, the fault lies with the system under test, its execution environment, or the driver implementation, never with the scenario itself.

{blurb, class: information}

BDD style acceptance tests create determinism!

This is a huge benefit compared to non-deterministic approaches to software development, where the QA department gets lost in fact-finding missions whenever behavioral outcomes do not match expectations, and bugs, incidents, and defects are the result.

Drivers extend DSL

acceptance/.../driver/

Drivers encapsulate:

- HTTP calls,
- authentication setup,
- request construction.

While the DSL provides the building blocks from which tests are composed, a driver implements the DSL so that the tests can execute in an operational environment.

The additional interface `MyStuffAcceptanceDriver`, which extends the DSL, is one way to control the variables. In this example I need two different kinds of users, because some functional criteria are specific to the user interacting with the system. This interface is the place where such variables are defined (`userA()`, `userB()`); concrete drivers may override them.

```java
package at.steell.mystuff.acceptance.driver;

import at.steell.mystuff.acceptance.dsl.MyStuffAcceptanceDsl;

import java.util.Collection;
import java.util.Set;

import static org.junit.jupiter.api.Assertions.assertTrue;

public interface MyStuffAcceptanceDriver extends MyStuffAcceptanceDsl {

    // Per-thread result of the most recent listUserAssets call.
    ThreadLocal<Collection<String>> CURRENT_ASSET_IDS = ThreadLocal.withInitial(Set::of);

    @Override
    default void assertListOfAssetsIsEmpty() {
        assertTrue(CURRENT_ASSET_IDS.get().isEmpty());
    }

    // Test variables: the two users; concrete drivers may override them.
    default String userA() {
        return "User A";
    }

    default String userB() {
        return "User B";
    }
}
```

Domain Driver and Test

The domain driver translates between the DSL and the business rules directly, without HTTP or a browser in between. Hence the execution environment is very easy to set up: an interactor backed by an in-memory store.

Domain Driver

```java
package at.steell.mystuff.acceptance.driver;

import at.steell.mystuff.domain.entity.Asset;
import at.steell.mystuff.domain.exception.NotReadable;
import at.steell.mystuff.domain.interactor.AssetInteractor;
import at.steell.mystuff.domain.interactor.AssetInteractor.AssetInteractorFactory;
import at.steell.mystuff.domain.interactor.AssetInteractor.NoOwner;
import at.steell.mystuff.domain.store.InMemoryAssetStore;

import java.util.logging.Logger;
import java.util.stream.Collectors;

import static org.junit.jupiter.api.Assertions.assertEquals;
import static org.junit.jupiter.api.Assertions.assertNotNull;
import static org.junit.jupiter.api.Assertions.assertThrows;
import static org.junit.jupiter.api.Assertions.assertTrue;

public class MyStuffDomainDriver implements MyStuffAcceptanceDriver {

    private static final Logger LOGGER = Logger.getLogger(MyStuffDomainDriver.class.getName());

    // The system under test: the interactor, backed by an in-memory store.
    private final AssetInteractor assetInteractor = new AssetInteractorFactory()
            .withAssetStore(new InMemoryAssetStore())
            .create();

    // In the domain, "authentication" is simply the user name passed to each use case.
    private String currentUsername = null;

    @Override
    public void authenticateAsUser(final String username) {
        currentUsername = username;
        LOGGER.info("authenticating as user " + username);
        assertEquals(username, currentUsername);
    }

    @Override
    public void unauthenticateUser() {
        LOGGER.info("unauthenticating " + currentUsername);
        currentUsername = null;
    }

    @Override
    public void assertThatUserIsAuthenticated(final String username) {
        LOGGER.info("authenticated user: " + currentUsername
                + ", asserted user: " + username);
        // JUnit convention: expected value first, actual value second.
        assertEquals(username, currentUsername);
    }

    private String noUserCreateAsset() {
        assertThrows(NoOwner.class, () -> assetInteractor.createAsset(null));
        return null;
    }

    private String defaultCreateAsset() {
        return assetInteractor.createAsset(currentUsername);
    }

    @Override
    public String createAsset(final AssetOptions assetOptions) {
        return assetOptions != null && assetOptions.authenticatedUser() != null
                ? defaultCreateAsset()
                : noUserCreateAsset();
    }

    @Override
    public void assertThatAssetExists(final String assetId) {
        Asset asset = assetInteractor.find(assetId, currentUsername);
        assertNotNull(asset);
        assertEquals(assetId, asset.id());
    }

    @Override
    public void assertAssetNotReadable(final String assetId) {
        assertThrows(NotReadable.class, () -> assetInteractor.find(assetId, currentUsername));
    }

    @Override
    public void listUserAssets(final String authenticatedUser) {
        CURRENT_ASSET_IDS.set(assetInteractor.listAssets(authenticatedUser)
                .stream().map(Asset::id)
                .collect(Collectors.toSet()));
    }

    @Override
    public void noUserAssetsWithoutAuthentication() {
        assertThrows(RuntimeException.class, () -> assetInteractor.listAssets(null));
    }

    @Override
    public void assertListOfAssetsContains(final String assetId) {
        assertTrue(CURRENT_ASSET_IDS.get().contains(assetId));
    }
}
```

Domain Acceptance Test

```java
package at.steell.mystuff.acceptance.scenario;

import at.steell.mystuff.acceptance.driver.MyStuffDomainDriver;

class AssetDomainAcceptanceTest extends AbstractAssetAcceptanceTest {

    AssetDomainAcceptanceTest() {
        super(new MyStuffDomainDriver());
    }
}
```

Controller Driver and Test

The controller driver runs the tests against the REST controllers of the system. It also acts as a template: if the integration between user interface and business logic were built on AMQP, JNDI, JMX, SOAP/XML, or any other protocol, it would still be easy to provide a corresponding driver and verify our assumptions by executing the same tests through it.
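As a sketch of such a replacement driver, assume a hypothetical request/reply channel that carries plain-text messages, modelled here as a `UnaryOperator<String>` that in practice might wrap an AMQP or SOAP client. `MessagingDriver`, `FakeAssetService`, and the `|`-separated message format are invented for illustration; the point is that only the driver changes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.UnaryOperator;

// DSL subset (hypothetical): observable behavior only.
interface AssetDsl {
    String createAsset(String user);

    boolean assetIsReadable(String assetId, String user);
}

// Protocol driver: encodes DSL calls as text messages and decodes the replies.
class MessagingDriver implements AssetDsl {
    private final UnaryOperator<String> channel;  // hypothetical request/reply transport

    MessagingDriver(final UnaryOperator<String> aChannel) {
        channel = aChannel;
    }

    @Override
    public String createAsset(final String user) {
        return channel.apply("CREATE|" + user);
    }

    @Override
    public boolean assetIsReadable(final String assetId, final String user) {
        return "OK".equals(channel.apply("READ|" + assetId + "|" + user));
    }
}

// In-memory fake of the remote service, so the sketch runs without a broker.
class FakeAssetService implements UnaryOperator<String> {
    private final Map<String, String> ownerByAsset = new HashMap<>();
    private int nextId = 1;

    @Override
    public String apply(final String message) {
        String[] parts = message.split("\\|");
        if (parts[0].equals("CREATE")) {
            String assetId = "asset-" + nextId++;
            ownerByAsset.put(assetId, parts[1]);
            return assetId;
        }
        // READ|<assetId>|<user>
        boolean isOwner = parts.length == 3 && parts[2].equals(ownerByAsset.get(parts[1]));
        return isOwner ? "OK" : "FORBIDDEN";
    }
}
```

A scenario written against `AssetDsl` neither knows nor cares that its calls now travel as messages; swapping `FakeAssetService` for a real client would again only touch the driver's wiring.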

Controller Driver

```java
package at.steell.mystuff.acceptance.driver;

import at.steell.mystuff.application.web.AssetController.ListOfAssets;
import at.steell.mystuff.domain.entity.Asset;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.security.core.context.SecurityContextHolder;
import org.springframework.test.web.servlet.MockMvc;
import org.springframework.test.web.servlet.MvcResult;
import org.springframework.test.web.servlet.setup.MockMvcBuilders;
import org.springframework.web.context.WebApplicationContext;

import java.util.stream.Collectors;

import static at.steell.mystuff.application.user.UserSessionFromSecurityContextHelper.fromSpringSecurityContext;
import static at.steell.mystuff.application.utils.AssetControllerSupport.createOperation;
import static at.steell.mystuff.application.utils.AssetControllerSupport.findOperation;
import static at.steell.mystuff.application.utils.AssetControllerSupport.listOperation;
import static at.steell.mystuff.application.utils.SecurityMockUtils.setupOidcUserSecurityContext;
import static org.junit.jupiter.api.Assertions.assertEquals;
import static org.junit.jupiter.api.Assertions.assertTrue;
import static org.springframework.test.web.servlet.result.MockMvcResultMatchers.status;

public class MyStuffControllerDriver implements MyStuffAcceptanceDriver {

    private static final ObjectMapper OBJECT_MAPPER = new ObjectMapper();

    private final MockMvc mockMvc;

    public MyStuffControllerDriver(final WebApplicationContext webApplicationContext) {
        mockMvc = MockMvcBuilders.webAppContextSetup(webApplicationContext).build();
    }

    @Override
    public void authenticateAsUser(final String username) {
        setupOidcUserSecurityContext(username);
    }

    @Override
    public void unauthenticateUser() {
        SecurityContextHolder.clearContext();
    }

    @Override
    public void assertThatUserIsAuthenticated(final String username) {
        assertEquals(username, fromSpringSecurityContext().userName());
    }

    private String noUserCreateAsset() {
        try {
            mockMvc.perform(createOperation())
                    .andExpect(status().isUnauthorized());
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
        return null;
    }

    private String defaultCreateAsset() {
        try {
            MvcResult response = mockMvc.perform(createOperation())
                    .andExpect(status().isOk())
                    .andReturn();
            return response.getResponse().getContentAsString();
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public String createAsset(final AssetOptions assetOptions) {
        return assetOptions != null && assetOptions.authenticatedUser() != null
                ? defaultCreateAsset()
                : noUserCreateAsset();
    }

    @Override
    public void assertThatAssetExists(final String assetId) {
        try {
            mockMvc.perform(findOperation(assetId))
                    .andExpect(status().isOk());
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public void assertAssetNotReadable(final String assetId) {
        try {
            mockMvc.perform(findOperation(assetId))
                    .andExpect(status().isForbidden());
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public void listUserAssets(final String authenticatedUser) {
        try {
            String response = mockMvc.perform(listOperation())
                    .andExpect(status().isOk())
                    .andReturn().getResponse().getContentAsString();
            ListOfAssets listOfAssets = OBJECT_MAPPER.readValue(response, ListOfAssets.class);
            CURRENT_ASSET_IDS.set(listOfAssets.assets().stream().map(Asset::id)
                    .collect(Collectors.toSet()));
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public void noUserAssetsWithoutAuthentication() {
        try {
            mockMvc.perform(listOperation())
                    .andExpect(status().isForbidden());
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public void assertListOfAssetsContains(final String assetId) {
        assertTrue(CURRENT_ASSET_IDS.get().contains(assetId));
    }
}
```

Controller Test

The test spins up an execution environment: the application in its default configuration, on a random port, so that several verifications can run in parallel on a central build environment.

```java
package at.steell.mystuff.acceptance.scenario;

import at.steell.mystuff.acceptance.OauthTestSupport;
import at.steell.mystuff.acceptance.driver.MyStuffControllerDriver;
import at.steell.mystuff.application.MyStuffApplication;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.web.context.WebApplicationContext;

@SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT, classes = MyStuffApplication.class)
public class AssetControllerAcceptanceTest extends AbstractAssetAcceptanceTest implements OauthTestSupport {

    @Autowired
    public AssetControllerAcceptanceTest(final WebApplicationContext webApplicationContext) {
        super(new MyStuffControllerDriver(webApplicationContext));
    }
}
```

UI Driver and Test

UI Driver

The UI driver uses Playwright to translate the DSL constructs into user interface interactions.

```java
package at.steell.mystuff.acceptance.driver;

import com.microsoft.playwright.BrowserContext;
import com.microsoft.playwright.BrowserType;
import com.microsoft.playwright.Page;
import com.microsoft.playwright.Playwright;
import com.microsoft.playwright.Tracing;
import com.microsoft.playwright.options.AriaRole;

import java.nio.file.Paths;
import java.util.List;
import java.util.Set;

import static org.junit.jupiter.api.Assertions.assertTrue;

public class MyStuffUiDriver implements MyStuffAcceptanceDriver {

    private static final boolean HEADLESS = true;

    private Playwright playwright;
    private BrowserContext context;
    private Page page;
    private ServerUrl serverUrl;

    @Override
    public String userA() {
        return "user1";
    }

    @Override
    public String userB() {
        return "user2";
    }

    private void setupContextTracing() {
        context.tracing().start(new Tracing.StartOptions()
                .setScreenshots(true)
                .setSnapshots(true)
                .setSources(true));
    }

    public void startContext() {
        context = playwright.chromium()
                .launch(new BrowserType.LaunchOptions()
                        .setHeadless(HEADLESS))
                .newContext();
        setupContextTracing();
    }

    public void stopContext(final String relativeArchivePath) {
        context.tracing().stop(new Tracing.StopOptions()
                .setPath(Paths.get(relativeArchivePath)));
        context.close();
        playwright.close();
    }

    public void setupPlaywright() {
        playwright = Playwright.create();
    }

    public void closePlaywright() {
        playwright.close();
    }

    public void setServerUrl(final ServerUrl aServerUrl) {
        serverUrl = aServerUrl;
    }

    private void oauthLogin() {
        page = context.newPage();
        OauthUiLoginFlow oauthUiLoginFlow = new OauthUiLoginFlow(page, serverUrl);
        oauthUiLoginFlow.login();
    }

    @Override
    public void authenticateAsUser(final String username) {
        oauthLogin();
    }

    @Override
    public void unauthenticateUser() {
        context.clearCookies();
        page = context.newPage();
    }

    @Override
    public void assertThatUserIsAuthenticated(final String username) {
        page.navigate(serverUrl.baseUrl() + "/me");
        assertTrue(page.locator("app-me .me-handle").textContent().contains("@" + username));
    }

    private void keycloakLoginPageIsVisible() {
        page.getByRole(AriaRole.TEXTBOX, new Page.GetByRoleOptions().setName("Username")).isVisible();
        page.getByRole(AriaRole.TEXTBOX, new Page.GetByRoleOptions().setName("Password")).isVisible();
    }

    private String noUserCreateAsset() {
        page = context.newPage();
        keycloakLoginPageIsVisible();
        return null;
    }

    private String defaultCreateAsset() {
        page.navigate(serverUrl.baseUrl() + "/assets/create");
        page.getByTestId("create-asset-button").click();
        return page.getByTestId("creation-result").locator("span.mono").textContent().trim();
    }

    @Override
    public String createAsset(final AssetOptions assetOptions) {
        return assetOptions != null && assetOptions.authenticatedUser() != null
                ? defaultCreateAsset()
                : noUserCreateAsset();
    }

    @Override
    public void assertThatAssetExists(final String assetId) {
        page.navigate(serverUrl.baseUrl() + "/assets/detail");
        page.getByRole(AriaRole.TEXTBOX).fill(assetId);
        page.getByRole(AriaRole.BUTTON).click();
        assertTrue(page.getByTestId("asset-detail").textContent().contains(assetId));
    }

    @Override
    public void assertAssetNotReadable(final String assetId) {
        keycloakLoginPageIsVisible();
    }

    @Override
    public void noUserAssetsWithoutAuthentication() {
        keycloakLoginPageIsVisible();
    }

    @Override
    public void listUserAssets(final String authenticatedUser) {
        CURRENT_ASSET_IDS.set(Set.of());
        page.navigate(serverUrl.baseUrl() + "/assets/list");
        page.waitForResponse("**/api/assets/list",
                () -> page.getByRole(AriaRole.BUTTON).click());
        List<String> displayedIds = page.locator("[data-testid='asset-row'] .mono")
                .allTextContents()
                .stream()
                .map(String::trim)
                .toList();
        CURRENT_ASSET_IDS.set(displayedIds);
    }

    @Override
    public void assertListOfAssetsContains(final String assetId) {
        assertTrue(CURRENT_ASSET_IDS.get().contains(assetId));
    }
}
```

UI Acceptance Test

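This test spins up a Spring Boot application that also hosts the user interface, and uses Playwright to automate browser interaction. The scenario `listAssetsDifferentAuthentication_emptyResults` is overridden, because it cannot be reached through the user interface.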

```java
package at.steell.mystuff.acceptance.scenario;

import at.steell.mystuff.acceptance.OauthTestSupport;
import at.steell.mystuff.acceptance.driver.MyStuffUiDriver;
import at.steell.mystuff.acceptance.driver.ServerUrl;
import at.steell.mystuff.application.MyStuffApplication;
import org.junit.jupiter.api.AfterEach;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Tag;
import org.junit.jupiter.api.Test;
import org.junit.jupiter.api.TestInfo;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.boot.web.server.servlet.context.ServletWebServerApplicationContext;
import org.springframework.web.context.WebApplicationContext;

import static org.junit.jupiter.api.Assertions.assertThrows;

@Tag("acceptanceTest")
@SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT, classes = MyStuffApplication.class)
class AssetUiAcceptanceTest extends AbstractAssetAcceptanceTest implements OauthTestSupport {

    private final ServletWebServerApplicationContext servletWebServerApplicationContext;
    private final WebApplicationContext webApplicationContext;

    @Autowired
    AssetUiAcceptanceTest(
            final ServletWebServerApplicationContext testServletWebServerApplicationContext,
            final WebApplicationContext testWebApplicationContext) {
        super(new MyStuffUiDriver());
        servletWebServerApplicationContext = testServletWebServerApplicationContext;
        webApplicationContext = testWebApplicationContext;
    }

    @BeforeEach
    void setupPlaywright() {
        getUiDriver().setupPlaywright();
        getUiDriver().startContext();
        setupServerPortAndContextPath();
    }

    private MyStuffUiDriver getUiDriver() {
        return (MyStuffUiDriver) getDriver();
    }

    // One Playwright trace archive per test method, e.g. target/createAsset.zip.
    private String testArchiveRelativePath(final TestInfo testInfo) {
        return "target/" + testInfo.getTestMethod().orElseThrow().getName() + ".zip";
    }

    @AfterEach
    void stopTracing(final TestInfo testInfo) {
        getUiDriver().stopContext(testArchiveRelativePath(testInfo));
        getUiDriver().closePlaywright();
    }

    // The random port is only known after startup, so the driver receives it here.
    private void setupServerPortAndContextPath() {
        int serverPort = servletWebServerApplicationContext.getWebServer().getPort();
        String contextPath = webApplicationContext.getEnvironment()
                .getProperty("server.servlet.context-path", "");
        getUiDriver().setServerUrl(new ServerUrl(serverPort, contextPath));
    }

    private void unreachable() {
        throw new UnsupportedOperationException("This is not reachable in the user interface");
    }

    // This scenario cannot be reproduced through the user interface, so it is overridden here.
    @Override
    @Test
    void listAssetsDifferentAuthentication_emptyResults() {
        assertThrows(UnsupportedOperationException.class, this::unreachable);
    }
}
```

DSL

acceptance/.../dsl/

The DSL composes driver calls into readable building blocks.

Example intent (conceptually):

- authenticate user

- create asset

- list assets

- assert empty / assert contains

This keeps scenarios:

- readable,
- intention-focused,
- stable under refactoring.

Probably most important: with a little practice, anyone familiar with the domain can read and understand the DSL and the tests. Business and technical people, software engineers, project managers, practically anybody in the organization who knows the domain will be able to tell instantly and exactly what a test expresses.

Example

```java
// Test
@Test
void unauthenticatedCannotRead() {
    String assetId = createAssetWithUser(driver.userA());
    driver.assertThatAssetExists(assetId);
    driver.unauthenticateUser();
    driver.assertAssetNotReadable(assetId);
}

// DSL
String createAsset(AssetOptions assetOptions);
void assertThatAssetExists(String assetId);
void assertAssetNotReadable(String assetId);
void listUserAssets(String authenticatedUser);
void noUserAssetsWithoutAuthentication();
void assertListOfAssetsIsEmpty();
void assertListOfAssetsContains(String assetId);
```

Even though the test is written in Java, everybody concerned with the behavior of the system can easily read it and understand its intended purpose.

UI Acceptance Module — End-to-End Confidence

In my example, I deliberately chose Playwright (see https://playwright.dev/) for automation, but don't get me wrong: I could just as well have used another end-to-end testing framework to automate tests against the user interface.

The build spins up the Spring Boot backend application in a production-like setup and uses browser automation to run the set of acceptance tests against this layer of the application.

Purpose

UI acceptance tests validate:

- frontend + backend integration,
- security propagation,
- real user flows.

They are:

- slower,
- more expensive,
- but essential for confidence.

They are therefore not run in the commit stage, but in the acceptance stage of the build pipeline.

Location

```
ui-acceptance/
└── src/test/java/.../AssetUiAcceptanceTest.java
```

Frontend (ng-frontend) — Independent, Testable, Replaceable

Location

ng-frontend/

The frontend is treated as:

- a first-class artifact,
- independently buildable,
- independently testable.

This reinforces the idea that:

architecture is about enabling change.

Continuous Integration Pipeline

Enforcing the Habits.

Location

.github/workflows/

Commit Stage

The commit-stage workflow:

- builds the system,
- runs unit and lower-level tests,
- executes static code analysis (Sonar).

It is designed to be:

- fast,
- mandatory,
- non-negotiable.

Green means safe to integrate.

Acceptance Stage

The acceptance-stage workflow:

- runs acceptance and UI acceptance tests,
- validates real system behavior.

It trades speed for confidence and is intentionally separated to protect flow.

Why These Artifacts Matter Together

Each artifact alone is useful. Together, they form a feedback system:

- TDD keeps design clean and changeable.

- Acceptance tests keep intent stable.

- CI enforces standards automatically.

- Trunk-based development becomes safe.

This is not “extra process”. This is how you move fast without breaking things.

Closing Note

This example is not meant to be copied line by line.

It is meant to be understood and adapted.

The structure, separation, and test strategy are the transferable lessons — not the specific class names.

Principles over process.

If you are interested, check out the demo project on GitHub: https://github.com/my-ellersdorfer-at/my-stuff.

Evidence-Driven Progress, Metrics, and Organizational Impact

The practices demonstrated in this appendix do more than improve code quality. They enable evidence-based leadership across the organization.

When Test Driven Development, BDD-style acceptance tests, CI/CD, and trunk-based development are combined, the delivery pipeline itself becomes a continuous reporting system.

This section connects that reporting capability to:

- DORA metrics,
- concrete management-level artifacts,
- and the broader themes of this book.

Acceptance Tests as the Foundation of Meaningful Metrics

Traditional project metrics often answer the wrong questions:

- “How busy are people?”
- “How much code was written?”
- “How far along do we feel?”

Acceptance tests answer better questions:

- “What can users actually do today?”
- “What behavior is stable?”
- “What changed since the last release?”

Because acceptance tests execute automatically on every build, they produce objective, repeatable evidence.

Alignment with DORA Metrics

The acceptance-test-driven delivery pipeline maps naturally to the four key DORA metrics.

Lead Time for Changes

Acceptance tests shorten lead time by:

- enabling safe, small changes (Chapter 4: Test Driven Development),
- validating behavior automatically at every integration point (Chapter 5: Continuous Integration).

A change is “done” when acceptance scenarios pass — not when work feels complete.

Deployment Frequency

High deployment frequency becomes feasible because:

- trunk-based development minimizes merge risk (Chapter 5: Flow vs. Process),

- acceptance tests provide immediate confidence in user-visible behavior.

Frequent deployment is not bravery. It is the result of safety.

Change Failure Rate

Acceptance tests reduce change failure rate by:

- detecting behavioral regressions immediately,

- protecting existing functionality during refactoring, modernization, or tool replacement (Chapter 4: Refactoring).

Failures become visible early — long before users encounter them.

Mean Time to Restore (MTTR)

When a failure occurs:

- acceptance tests identify which behavior broke,

- recent commits are small and traceable,

- restoration is focused and fast.

MTTR improves not because teams “work harder”, but because systems are observable.
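To make the mapping to DORA metrics more concrete, the following is a minimal Java sketch that computes the four metrics from deployment records a CI/CD pipeline could export. The `Deployment` record, its fields, and the `DoraMetrics` class are hypothetical and are not taken from the example repository. The sketch also assumes that an incident starts at the moment of the failed deployment, which is a simplification.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

// Hypothetical deployment record, e.g. exported from the CI/CD system.
// restoredTime is only meaningful when failed == true (otherwise null).
record Deployment(Instant commitTime, Instant deployTime, boolean failed, Instant restoredTime) {}

final class DoraMetrics {
    private DoraMetrics() {}

    // Lead time for changes: median duration from commit to production.
    static Duration medianLeadTime(List<Deployment> deployments) {
        List<Duration> leadTimes = deployments.stream()
                .map(d -> Duration.between(d.commitTime(), d.deployTime()))
                .sorted()
                .toList();
        int n = leadTimes.size();
        if (n == 0) {
            return Duration.ZERO;
        }
        return n % 2 == 1
                ? leadTimes.get(n / 2)
                : leadTimes.get(n / 2 - 1).plus(leadTimes.get(n / 2)).dividedBy(2);
    }

    // Deployment frequency: deployments per day over the observed period.
    static double deploymentsPerDay(List<Deployment> deployments, Duration period) {
        double days = period.getSeconds() / 86_400.0;
        if (days <= 0) {
            throw new IllegalArgumentException("period must be positive");
        }
        return deployments.size() / days;
    }

    // Change failure rate: share of deployments that caused a failure.
    static double changeFailureRate(List<Deployment> deployments) {
        if (deployments.isEmpty()) {
            return 0.0;
        }
        long failures = deployments.stream().filter(Deployment::failed).count();
        return (double) failures / deployments.size();
    }

    // Mean time to restore: average time from a failed deployment to restoration
    // (simplification: the incident is assumed to start at deployTime).
    static Duration meanTimeToRestore(List<Deployment> deployments) {
        List<Duration> restoreTimes = deployments.stream()
                .filter(d -> d.failed() && d.restoredTime() != null)
                .map(d -> Duration.between(d.deployTime(), d.restoredTime()))
                .toList();
        if (restoreTimes.isEmpty()) {
            return Duration.ZERO;
        }
        Duration total = restoreTimes.stream().reduce(Duration.ZERO, Duration::plus);
        return total.dividedBy(restoreTimes.size());
    }
}
```

The sketch uses the median for lead time so that a single slow change does not distort the picture. The other metrics are simple ratios and averages. In practice, the failure and restoration timestamps would come from incident tracking rather than from the pipeline alone.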

Example: Acceptance-Test-Based Progress Report

The following artifacts can be generated automatically from the CI pipeline and shared with teams, management, and — where appropriate — customers.

Table: Acceptance Summary per Build

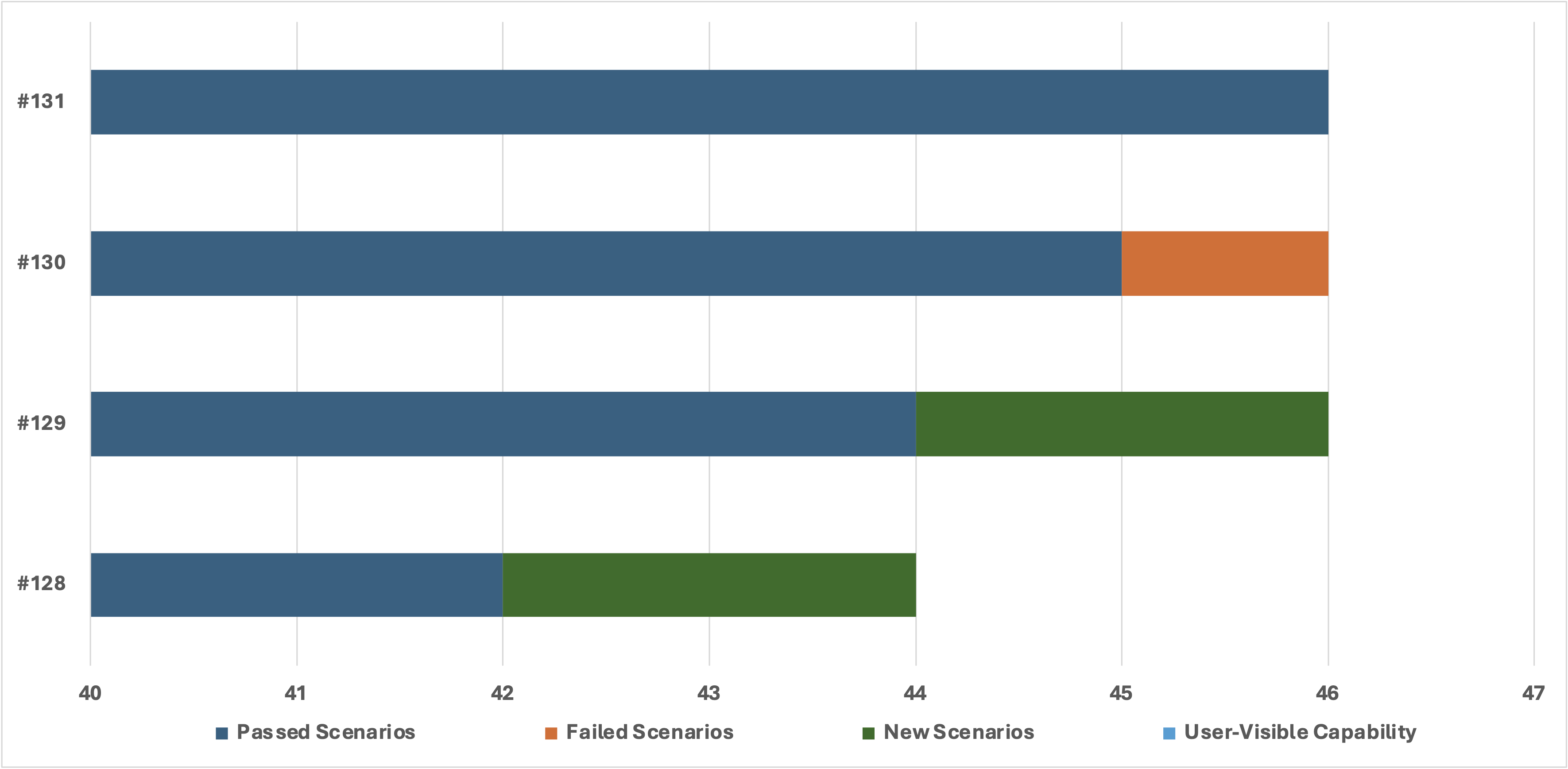

| Build | Passed Scenarios | Failed Scenarios | New Scenarios | User-Visible Capability |
|---|---|---|---|---|
| #131 | 46 | 0 | 0 | Regression fixed |
| #130 | 45 | 1 | 0 | Regression detected |
| #129 | 44 | 0 | +2 | Authentication enforced |
| #128 | 42 | 0 | +2 | Asset creation + listing |

This report answers, at a glance:

- Are we progressing?

- Did anything break?

- What changed for the user?

Upward trends represent growing system capability. Drops indicate detected regressions. They are not failures of people but successes of the safety system.

This visualization is particularly powerful for management: it shows progress and stability simultaneously.

No interpretation. No blame.
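Rows like those in the acceptance summary can be derived mechanically from per-build test results. The following Java sketch assumes that the pipeline already provides, for each build, a map from scenario name to pass/fail outcome. Parsing JUnit or Cucumber reports into that map is not shown. The `BuildResult`, `BuildSummary`, and `AcceptanceReport` types are hypothetical and do not come from the example repository. A scenario counts as new when its name did not appear in the previous build.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical input: scenario name -> true if the scenario passed in this build.
record BuildResult(int buildNumber, Map<String, Boolean> scenarioOutcomes) {}

// One report row; failedScenarios names the behavior that broke.
record BuildSummary(int buildNumber, int passed, int failed, int newScenarios,
                    Set<String> failedScenarios) {}

final class AcceptanceReport {
    private AcceptanceReport() {}

    // Builds must be ordered oldest first. For the very first build,
    // every scenario counts as new because there is no predecessor.
    static List<BuildSummary> summarize(List<BuildResult> builds) {
        List<BuildSummary> rows = new ArrayList<>();
        Set<String> previousScenarios = Set.of();
        for (BuildResult build : builds) {
            Map<String, Boolean> outcomes = build.scenarioOutcomes();
            Set<String> failed = new TreeSet<>();
            int passed = 0;
            for (Map.Entry<String, Boolean> entry : outcomes.entrySet()) {
                if (Boolean.TRUE.equals(entry.getValue())) {
                    passed++;
                } else {
                    failed.add(entry.getKey());
                }
            }
            int newScenarios = 0;
            for (String name : outcomes.keySet()) {
                if (!previousScenarios.contains(name)) {
                    newScenarios++;
                }
            }
            rows.add(new BuildSummary(build.buildNumber(), passed, failed.size(),
                    newScenarios, failed));
            previousScenarios = outcomes.keySet();
        }
        return rows;
    }
}
```

Keeping the names of failing scenarios, and not just a count, is what makes a drop in the report actionable. It tells the team which user-visible behavior regressed.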

Transparency Without Blame — Reinforced by Evidence

Psychological safety is essential for high-performing teams. But safety must coexist with professionalism.

Acceptance-test-based reporting enables both:

- Teams feel safe to change the system because regressions are detected early.

- Management feels safe to trust progress because it is observable.

- Customers feel safe to rely on the system because behavior is protected.

Transparency is no longer a demand. It is a side effect of how the system is built.

This directly reinforces:

- Chapter 3: Ethics, Practices, and Good Habits of Programmers

- Chapters 6–11: Psychological safety, curiosity, adaptive intelligence, and empowered teams

Long-Term System Resilience

Over long time horizons, systems inevitably change:

- frameworks are replaced,

- architectures evolve,

- teams change,

- business priorities shift.

Acceptance tests remain constant, because they describe user-visible behavior rather than implementation details.

They act as an active safety net that:

- prevents accidental loss of functionality,

- preserves organizational knowledge in executable form,

- enables modernization without fear.

In this sense, acceptance tests are not just a quality practice. They are strategic risk mitigation.

Opportunities by Organizational Level

Software Development Teams

- Safe refactoring and modernization (Chapter 4)

- Clear definition of done via acceptance criteria (Chapter 5)

- Reduced cognitive load through automation

Middle Management

- Objective progress reporting without micromanagement

- Early visibility into risk and stability

- Evidence-based coordination across teams (Chapters 7–9)

Top Management

- Trust grounded in observable outcomes

- Alignment of delivery with business value

- Improved DORA metrics without coercion

No Heroes Required

Before closing this appendix, one misunderstanding must be addressed explicitly:

There is absolutely no need — and no place — for hero developers, 10x developers, or individual saviors.

This book has consistently argued (see Chapter 3: Ethics, Practices, and Good Habits of Programmers) that professionalism in software development is not defined by brilliance under pressure, but by reliability over time.

What high-performing software organizations actually need is:

- sustainable throughput,

- consistently high quality,

- and systems that remain changeable for years.

Heroics are not a strength. They are a warning sign that fundamentals are missing.

Agile Development as Risk Management

(See Chapter 4: Test Driven Development)

The purpose of agile software development is not speed for its own sake. It is risk management through fast feedback.

Risk is reduced by fast feedback that is based on high-quality work and validated continuously by tests and automation.

When feedback is fast and reliable, discussions about scope fundamentally change. Scope is no longer defended, negotiated, or frozen prematurely.

Instead:

- scope grows continuously,

- decisions adapt to reality,

- value emerges incrementally.

This is not achieved by perfect upfront planning. It is achieved by learning faster than the system changes.

Quality Enables Speed — Never the Other Way Around

- Only quality allows us to go fast.

- Only good design allows us to maintain quality.

- Only changeable systems allow sustained delivery.

Knowledge of languages, frameworks, and tools is valuable. But without discipline and good habits, that knowledge erodes quickly.

The practices described in Chapter 4 (TDD, refactoring, simple design) exist precisely to protect quality under change.

The Habits That Actually Matter

The example in this appendix demonstrates habits that scale across teams and organizations:

- Working together — often on the same keyboard

- Test-driving design and development

- Optimizing relentlessly for fast feedback

- Describing acceptance criteria as executable specifications

- Automating everything that is boring

Boring means: even after 100 iterations, the result is always the same.

If results differ every time, it is not boring — it is a learning problem.
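For a scripted task, this "boring" criterion can itself be checked mechanically. The following is a minimal Java sketch that assumes the task can be expressed as a `Supplier` whose results are comparable with `equals`. The class and method names are hypothetical. The iteration count is a parameter, and the prose above uses 100.

```java
import java.util.Objects;
import java.util.function.Supplier;

final class AutomationCandidates {
    private AutomationCandidates() {}

    // Returns true if every run yields the same result (by equals):
    // the task is "boring" and a good candidate for automation.
    // Returns false as soon as two results differ: a learning problem.
    static <T> boolean isBoring(Supplier<T> task, int iterations) {
        if (iterations < 1) {
            throw new IllegalArgumentException("iterations must be >= 1");
        }
        T first = task.get();
        for (int i = 1; i < iterations; i++) {
            if (!Objects.equals(first, task.get())) {
                return false;
            }
        }
        return true;
    }
}
```

For example, `AutomationCandidates.isBoring(() -> "build ok", 100)` returns `true`, while a task whose result changes between runs returns `false`.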

- Automation creates space for learning.

- Learning improves design.

- Good design preserves quality.

- Quality enables speed.

Sustainability Over Heroics

Hero-based systems collapse under:

- growth,

- change,

- absence,

- and time.

Habit-based systems improve with each iteration.

This is the central promise of the practices described throughout this book: they replace dependence on exceptional individuals with dependable systems.

Everybody can do this. No heroes are required.

- Just professionalism.

- Just discipline.

- Just fundamentals.
